NVIDIA Unveils Groundbreaking GH200 Chip for AI Advancements

Last month, NVIDIA presented its new chip, the GH200. The chip is designed for training and deploying AI systems, and NVIDIA positions it as a major step up in generative AI performance compared with its previous products.

NVIDIA is also opening a beta program for its next-generation Grace Hopper platform. This version of the platform uses HBM3e memory, and NVIDIA describes it as the world’s first HBM3e processor. By combining a CPU and a GPU in one package, the GH200 is intended to run AI and HPC workloads faster and more efficiently than previous designs, giving you more room to put AI to work.

NVIDIA’s Impact and Reputation

Since its founding in 1993, NVIDIA has been a pioneer in graphics processing units (GPUs). Its GPUs have driven 3D graphics in gaming and, later, scientific computing and artificial intelligence, keeping the company at the forefront of the computing industry.

Over the years, the company has earned a reputation for pushing GPUs well beyond their original purpose. In gaming, the NVIDIA brand is synonymous with high graphics performance, and NVIDIA is a dominant force in the global graphics card market.

In AI, NVIDIA’s influence shows in GPU architectures such as Tesla, Volta, and Turing. These architectures have enabled researchers and developers to build more sophisticated models and systems.

NVIDIA invests heavily in research and development to push past existing limits. The company devotes substantial resources to advancing machine learning and artificial intelligence, including joint initiatives with academic institutions and industry partners. This sustained commitment to research has helped NVIDIA stand out as one of the leading players in AI.

The 2023 unveiling of the GH200 shows the company continuing to pursue the most advanced technologies. The new chip’s AI computing capabilities further strengthen NVIDIA’s position as a leader in both the AI and GPU industries.

NVIDIA’s track record shows a company committed to continued growth. Its legacy is built on advancing computing and AI, and this announcement strengthens that reputation further.

Announcement of GH200 Chip

NVIDIA has announced its next-generation GH200 Grace Hopper platform, built for the era of accelerated computing and generative AI. If you work in technology, this announcement is worth following because of its potential to reshape how AI systems are built.

The GH200 uses HBM3e memory, which is designed for the heavy memory demands of AI workloads. The chip is an important product both for NVIDIA and for the wider AI ecosystem, and it is expected to drive progress in large language models, recommender systems, and other complex AI applications. With the GH200, you can explore what AI-powered tools can offer your own projects.

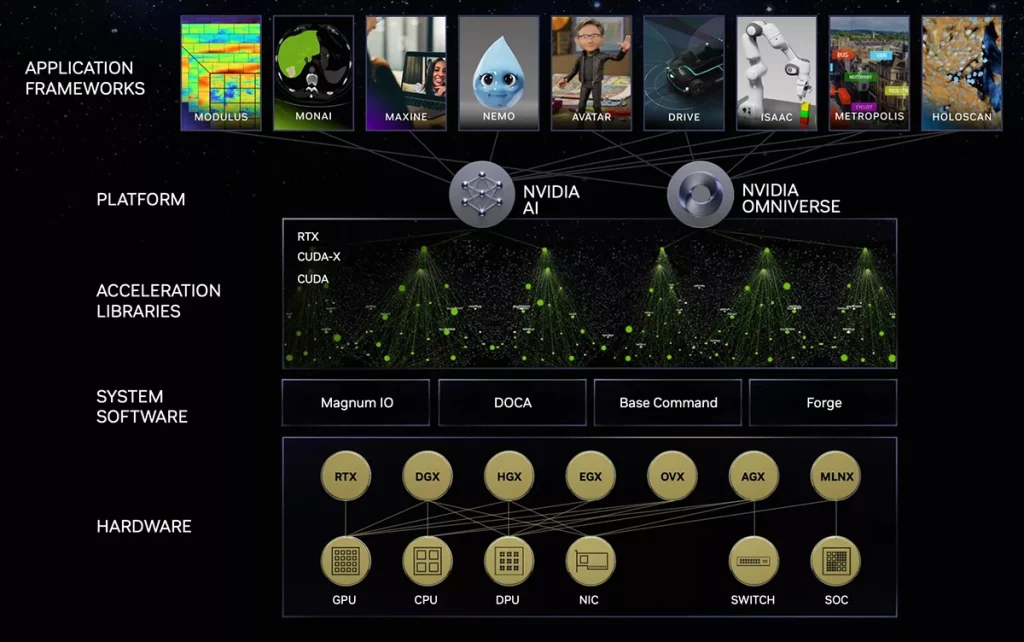

The GH200 platform runs NVIDIA’s full software stack, including NVIDIA AI, the Omniverse platform, and RTX technology. This lets you keep working with existing tools while taking advantage of the new capabilities of the Grace Hopper Superchip.

The DGX GH200 supercomputer shows what GH200 chips can achieve at scale. It connects 256 Grace Hopper Superchips, each combining a CPU and a GPU, into a system with 144 TB of shared memory, far more than earlier NVIDIA DGX systems. This kind of capacity should help advance performance and frontier AI research and development.

In short, the GH200 marks a leap forward for computing and generative AI. It reflects NVIDIA’s commitment to innovation and gives today’s users the means to take their AI initiatives further.

Technical Specifications of the GH200 Chip

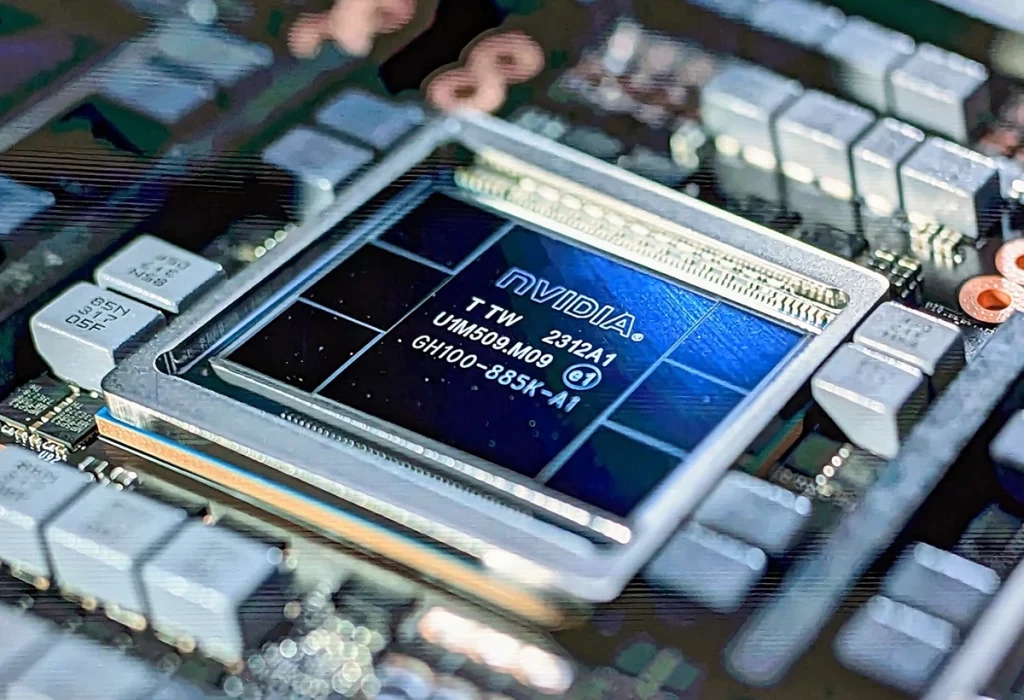

Chip Architecture

The GH200 is based on NVIDIA’s next-generation Grace Hopper Superchip, which combines a Grace CPU and a Hopper GPU. Its HBM3e memory gives AI applications more memory capacity and bandwidth to work with. In the DGX GH200 system, 256 Grace Hopper Superchips share a combined 144 TB of memory, allowing you to build much larger and more detailed models than before.
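The 144 TB figure can be checked with simple arithmetic. The sketch below assumes NVIDIA’s published per-superchip memory for the original HBM3 configuration of the GH200 (480 GB of LPDDR5X on the Grace CPU plus 96 GB of HBM3 on the GPU) and binary units (1 TB = 1,024 GB). The HBM3e version uses a different HBM capacity, so treat these numbers as assumptions rather than a specification.

```python
# Assumed per-superchip memory for the original (HBM3) GH200 configuration.
LPDDR5X_GB = 480   # CPU-attached memory on the Grace CPU (assumption)
HBM3_GB = 96       # GPU-attached memory on the Hopper GPU (assumption)
SUPERCHIPS = 256   # Grace Hopper Superchips in one DGX GH200

per_superchip_gb = LPDDR5X_GB + HBM3_GB     # memory addressable per superchip
total_gb = per_superchip_gb * SUPERCHIPS    # memory across the whole system
total_tb = total_gb / 1024                  # binary units: 1 TB = 1,024 GB

print(f"{per_superchip_gb} GB per superchip x {SUPERCHIPS} = {total_gb:,} GB = {total_tb:.0f} TB")
```

Running it prints `576 GB per superchip x 256 = 147,456 GB = 144 TB`, which matches the shared-memory figure quoted for the DGX GH200.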

Performance Metrics

NVIDIA claims the GH200 holds a substantial lead over other chips on the market. Its GPU is built on the same Hopper architecture as the H100, which has been NVIDIA’s top-rated AI product. The bigger change is memory: according to the announcement, memory capacity has roughly tripled, so systems built on the GH200 can handle much larger datasets and more complex AI tasks. The extra memory should also speed up building and training AI models and make generative AI workloads more efficient.

Energy Efficiency

The GH200 is also designed with energy efficiency in mind. It aims to deliver high computing power without overburdening your organization’s energy supply, which can help you meet sustainability goals. Combining high performance with power efficiency means you can deploy the GH200 without a large increase in operating costs or environmental impact.

Adding the GH200 to your AI systems can improve productivity, make better use of resources, and generally improve the experience across a variety of AI projects.

Implications for Artificial Intelligence

Training AI Systems

The launch of NVIDIA’s GH200 chip opens new possibilities for training deep neural networks and for improving the performance of your AI projects.

The GH200’s architecture is central to this. It combines an Arm-based NVIDIA Grace CPU and an NVIDIA H100 Tensor Core GPU in a single package, connected by NVIDIA’s NVLink-C2C interconnect instead of a conventional PCIe link between CPU and GPU. NVIDIA rates this link at 900 GB/s, about seven times the bandwidth of PCIe Gen5. The faster connection improves AI model performance and accelerates training.
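To see what the CPU-GPU link speed means in practice, here is a back-of-the-envelope comparison. It assumes NVIDIA’s quoted 900 GB/s total (bidirectional) bandwidth for NVLink-C2C and roughly 64 GB/s per direction (about 128 GB/s bidirectional) for a PCIe Gen5 x16 link. The 96 GB working set is a hypothetical example, and the model ignores protocol overhead and latency, so it is a rough sketch rather than a benchmark.

```python
# Assumed link bandwidths, in GB/s, counting both directions.
NVLINK_C2C_GBPS = 900           # NVIDIA's quoted NVLink-C2C figure (assumption)
PCIE_GEN5_X16_GBPS = 2 * 64     # ~64 GB/s per direction for PCIe Gen5 x16 (assumption)

speedup = NVLINK_C2C_GBPS / PCIE_GEN5_X16_GBPS


def transfer_seconds(size_gb: float, bidirectional_gbps: float) -> float:
    """Idealized time to move size_gb in one direction.

    Simplification: bandwidth is split evenly between directions,
    and protocol overhead and latency are ignored.
    """
    per_direction_gbps = bidirectional_gbps / 2
    return size_gb / per_direction_gbps


WORKING_SET_GB = 96  # hypothetical working set copied from CPU to GPU memory

print(f"NVLink-C2C vs PCIe Gen5 x16: {speedup:.1f}x")
print(f"{WORKING_SET_GB} GB over PCIe Gen5 x16: {transfer_seconds(WORKING_SET_GB, PCIE_GEN5_X16_GBPS):.2f} s")
print(f"{WORKING_SET_GB} GB over NVLink-C2C: {transfer_seconds(WORKING_SET_GB, NVLINK_C2C_GBPS):.2f} s")
```

Under these assumptions the ratio comes out at about 7x (1.50 s versus 0.21 s for the hypothetical 96 GB copy), which is consistent with the "about seven times PCIe Gen5" comparison.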

The system also offers more memory capacity. This can reduce costs and makes it practical to develop more complex AI models, including large language models and advanced recommender systems.

Application in AI Systems

Leveraging the power and efficiency of Nvidia’s GH200 brings benefits to AI systems:

- Faster inference: Combining the NVIDIA Grace CPU with the NVIDIA H100 Tensor Core GPU lets AI solutions run inference tasks quickly and accurately.

- Performance: Its architecture makes the GH200 well suited to building AI systems that must handle demanding real-time processing workloads.

- Lower latency: The NVLink-C2C interconnect in the GH200 speeds up data movement and communication between CPU and GPU, which reduces response delays and improves AI acceleration.

The GH200 launch paves the way for further progress in the development, performance, and efficiency of AI systems across many industries and applications.

Understanding Market Perception and Trends

With the launch of NVIDIA’s GH200 in mind, it is worth looking at how the market perceives this AI hardware and the trends surrounding it.

The GH200 has been received with enthusiasm across the industry because it promises to meet the growing demand for powerful, efficient AI training and deployment. NVIDIA has also named major players such as Google Cloud, Meta Platforms, and Microsoft among the first expected users of the DGX GH200 for their AI workloads.

Trends in the AI hardware market reflect the rapid progress of the field:

- Demand for computing power to train and run AI systems keeps growing.

- Buyers place a high premium on the energy efficiency and effectiveness of large-scale data centers.

- More industries will adopt AI and machine learning in their daily operations.


NVIDIA’s GH200 chip is a step toward addressing these trends. Designed specifically for AI tasks, it not only boosts performance but can also be used across a wide range of workloads and deployment scenarios.

NVIDIA is at the forefront of AI hardware, and this chip is fully in line with that position. The DGX GH200 supercomputer, built on this chip, is another example of NVIDIA stretching the limits of AI and high-performance computing.

Understanding the GH200’s impact on market perception and trends matters. Keep in mind the chip’s role in the industry and how AI is evolving. By following its progress and adoption, you can form a complete picture of how it will shape the future of AI technology.

Comparative Analysis

The NVIDIA GH200 chip promises faster and more efficient AI training and deployment. Compared with earlier NVIDIA processors, a few features stand out.

Unlike its predecessors, the GH200 was designed specifically for generative AI tasks such as language models and recommender systems, which makes it a strong option for developers focused on AI.

Previous DGX systems connect eight GPUs with NVLink. The DGX GH200 instead links 256 Grace Hopper Superchips, each combining a CPU and a GPU, so that they operate as a single large GPU. This is a major jump in scale, which can raise your productivity when building AI systems and running demanding tasks.

The second difference is memory: the DGX GH200 provides 144 TB of shared memory. NVIDIA says this is nearly 500 times the memory of the previous-generation DGX A100, enabling developers to build and train far more complex models on more data. The DGX GH200 supercomputer was designed to provide massive computing resources, which makes it well suited to memory-intensive AI workloads.
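The "nearly 500 times" comparison can be reproduced roughly as follows. This sketch assumes the original DGX A100 configuration of eight 40 GB A100 GPUs (320 GB total) as the baseline, and 256 superchips with 576 GB each (480 GB LPDDR5X plus 96 GB HBM3) for the DGX GH200. Both baselines are assumptions, and the exact ratio depends on whether "144 TB" is read in binary or decimal units.

```python
# Assumed configurations (not taken from an official datasheet).
GH200_SUPERCHIPS = 256
GB_PER_SUPERCHIP = 480 + 96     # LPDDR5X + HBM3 in the original GH200 configuration
DGX_A100_GB = 8 * 40            # original DGX A100: eight 40 GB A100 GPUs

dgx_gh200_gb = GH200_SUPERCHIPS * GB_PER_SUPERCHIP  # 147,456 GB, i.e. 144 TB in binary units
ratio = dgx_gh200_gb / DGX_A100_GB                  # binary reading of "144 TB"
ratio_decimal = 144_000 / DGX_A100_GB               # decimal reading: 144 TB = 144,000 GB

print(f"Memory ratio (binary TB): {ratio:.0f}x")
print(f"Memory ratio (decimal TB): {ratio_decimal:.0f}x")
```

Depending on the unit convention, the ratio lands between about 450x and 461x, which is why the figure is usually rounded to "nearly 500x" rather than stated exactly.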

In addition, the Grace Hopper Superchip has more than 200 billion transistors, making it a leading processor for AI applications. Compared with NVIDIA’s other products, the GH200 offers higher performance and more capabilities.

In short, compared with NVIDIA’s earlier chips, the GH200 is a specialized, efficient, and powerful AI chip, particularly for generative AI models. Its combined advances in CPU, GPU, and shared memory let you build AI models and tackle tasks that once seemed impossible or impractical.

Possible Concerns and Obstacles

As you evaluate NVIDIA’s GH200, one of the most advanced chips for training and deploying AI systems, keep a number of concerns and challenges in mind.

One issue is power consumption, which new hardware of this kind tends to raise. The GH200 is built to handle very large workloads and needs a corresponding amount of energy to run efficiently, so make sure you have plans to supply and offset the extra power it will require.

Integrating the GH200 into your existing hardware and software systems is another challenge. As the AI market leader and an industry trendsetter, NVIDIA keeps pushing performance and capabilities forward, so be prepared to invest time and resources in adopting its latest technology.

Beyond these technical issues, there are other concerns to weigh when building advanced AI with the GH200. The potential misuse or abuse of AI systems cannot be overlooked and should be addressed appropriately. It is important to recognize these risks and put mechanisms in place to handle problems if they arise.

Finally, competition in the AI industry is constantly shifting. The GH200 provides substantial AI processing power, but competitors may eventually surpass it. Keeping up with industry developments will make your GH200 investment decision more effective and strategic. By keeping these concerns and challenges in mind, you can make sound decisions and apply the GH200 effectively to your AI projects.

Conclusion

NVIDIA’s GH200 marks the start of a new era for computing and AI. We can expect it to enable high-performance AI workloads that benefit both business and science.

The chip reinforces NVIDIA’s aim to lead the technology for training and deploying AI systems. Major technology companies such as Google Cloud, Meta, and Microsoft are expected to be among the first to use the GH200’s advanced capabilities. This should expand the range of available options and let developers build applications that were previously considered impossible or impractical.

In conclusion, the NVIDIA GH200 is a huge leap forward in computational power and AI capability. By using this chip, you can become an early adopter of emerging technology and take advantage of the solutions it offers today.

Frequently Asked Questions

How does the GH200 compare to the H100?

The GH200 is a major step up from the H100. It pairs an H100 GPU with a Grace CPU in a single package, linked by NVLink-C2C, and NVIDIA designed it specifically for complex AI and HPC tasks. NVIDIA has announced that the GH200 is in full production, which means AI systems built on it should become increasingly common in high-performance computing.

What specifications does the DGX GH200 offer?

The DGX GH200 is designed for deep-learning models that consume terabytes of data. It combines 256 Grace Hopper Superchips with 144 terabytes of shared memory, making it a scalable system capable of handling the largest AI models. For more information, see the DGX GH200 datasheet.

How does the GH200 chip differ from the NVIDIA Grace CPU?

The NVIDIA Grace CPU is a general-purpose, Arm-based processor built for a wide range of workloads. The GH200, by contrast, pairs a Grace CPU with a Hopper GPU in a single package built for AI and HPC, which sets it apart from CPU-only alternatives.

What are some examples of generative AI applications that can be supported by the NVIDIA GH200?

The NVIDIA GH200 is optimized for AI acceleration and supports a variety of use cases, including generative AI models such as large language models, recommender systems, and graph analytics. This generation gives AI researchers and practitioners better tools to tackle hard problems and set new standards for performance.

Why do AI systems often choose NVIDIA chips like the GH200?

NVIDIA processors, including the GH200, lead in architecture and performance for AI systems. NVIDIA’s sustained focus on AI and HPC applications has produced a line of chips that efficiently meet the demands of complex AI workloads. As a result, NVIDIA remains a leading choice for AI scientists and software developers.